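```python
# -*- coding: utf-8 -*-
"""
Created on Thu Jan 7 02:44:53 2020

@author: honey

Hands-on learning project 2: scrape the visit ranking of this blog's articles
"""
import requests                  # HTTP client
from bs4 import BeautifulSoup    # HTML parser (the 'lxml' backend must also be installed)
import pandas as pd              # tabulation and Excel export
```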

```python
# Build the list of listing-page URLs to scrape
def get_url(n):
    urllist = []
    for i in range(n):
        urllist.append('https://qinzc.me/page/%i' % (i + 1))
    return urllist
```
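The same page list can be built in one line with a list comprehension. This is a minimal sketch; `page_urls`, `n_pages` and `base` are hypothetical names, and the base URL is the one used in `get_url`:

```python
def page_urls(n_pages, base='https://qinzc.me/page/'):
    # hypothetical variant of get_url: pages are numbered from 1, so use range(1, n + 1)
    return [f'{base}{i}' for i in range(1, n_pages + 1)]

print(page_urls(3))
# ['https://qinzc.me/page/1', 'https://qinzc.me/page/2', 'https://qinzc.me/page/3']
```

Starting the range at 1 removes the need for the `i + 1` offset in the original loop.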

```python
# Scrape one listing page
def get_data(url, head, c_dic):
    # Request the page; the timeout keeps a stalled request from hanging the whole run
    ri = requests.get(url, headers=head, cookies=c_dic, timeout=10)
    ri.raise_for_status()  # treat HTTP errors (404, 500, ...) as failures
    # Parse the HTML
    soup = BeautifulSoup(ri.text, 'lxml')
    lis = soup.find('div', id="content").find_all('article', class_="post-list")
    # Serialize each article into a dict
    data = []
    for li in lis:
        data_dict = {}
        data_dict['标题'] = li.h2.a.text                            # title
        data_dict['日期'] = li.find('span', class_="ptime").text    # date
        data_dict['类别'] = li.find('span', class_="pcate").text.replace('\n', '').replace(' ', '')  # category
        data_dict['人气'] = li.find('span', class_="pcomm").text.replace('\r', '').replace('\n', '').replace(' ', '')  # popularity
        data_dict['简介'] = li.find('div', class_="post-excerpt").text  # excerpt
        data.append(data_dict)
    return data
```
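`get_data` strips whitespace from the category and popularity fields with chained `.replace()` calls, which miss tabs and other whitespace characters. A minimal sketch of an alternative, assuming the goal is to drop every whitespace character; `squash_whitespace` is a hypothetical helper and the input string is a made-up sample:

```python
def squash_whitespace(text):
    # str.split() with no argument splits on any run of whitespace
    # (spaces, carriage returns, newlines, tabs, ...); joining with '' drops all of it
    return ''.join(text.split())

raw = '\r\n   Python \t\n'   # made-up sample shaped like a scraped <span> text
print(repr(squash_whitespace(raw)))                                     # 'Python'
print(repr(raw.replace('\r', '').replace('\n', '').replace(' ', '')))  # 'Python\t'
```

Like the original, this also removes spaces inside the text; if only the surrounding whitespace should go, `.strip()` is enough.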

```python
if __name__ == "__main__":
    url = get_url(23)  # links to scrape: the URLs of the 23 listing pages

    # User-Agent and cookies
    cookie = 'commentposter=%E5%8C%BF%E5%90%8D; UM_distinctid=16e12947eda616-0d920e3bb94c44-7711439-1fa400-16e12947edba2e; CNZZDATA1260945619=120386725-1572267166-%7C1578310466'
    head = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/79.0.3945.88 Safari/537.36'}

    # Build the cookies dict
    c_dic = {}
    cookies_tmp = cookie.split('; ')
    for n in cookies_tmp:
        key, value = n.split('=', 1)  # split on the first '=' only; values may contain '='
        c_dic[key] = value
```
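The cookie string is turned into a dict by splitting on `; ` and then on `=`. A slightly more tolerant sketch, assuming a browser-style `Cookie` header string; `cookie_header_to_dict` is a hypothetical helper and `token=abc==` is a made-up cookie used only to show a value containing `=`:

```python
def cookie_header_to_dict(cookie):
    # hypothetical helper: 'a=1; b=2' -> {'a': '1', 'b': '2'}
    cookies = {}
    for item in cookie.split(';'):
        if not item.strip():
            continue  # skip empty segments, e.g. from a trailing ';'
        name, _, value = item.partition('=')  # split on the first '=' only
        cookies[name.strip()] = value.strip()
    return cookies

print(cookie_header_to_dict('commentposter=%E5%8C%BF%E5%90%8D; token=abc=='))
# {'commentposter': '%E5%8C%BF%E5%90%8D', 'token': 'abc=='}
```

The resulting dict can be passed to `requests.get` through its `cookies=` argument, exactly as `c_dic` is.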

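```python
    # Start scraping
    data_lst = []
    error_lst = []
    for u in url:
        try:
            data_lst.extend(get_data(u, head, c_dic))
            print('Collected %i records so far' % len(data_lst))
        except Exception as e:  # record the failed URL and move on to the next page
            error_lst.append(u)
            print('Scraping failed, URL:', u, e)
    print(data_lst)
```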
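The loop above keeps going when a page fails and remembers the failed URLs. A minimal sketch of the same pattern with the fetching step passed in as a function, so it can be tried without network access; `collect` and `fake_fetch` are hypothetical names, and the URLs are placeholders:

```python
def collect(urls, fetch):
    # fetch(url) must return a list of dicts; failures are recorded, not raised
    records, failed = [], []
    for u in urls:
        try:
            records.extend(fetch(u))
        except Exception as exc:
            failed.append((u, repr(exc)))  # keep the URL and the reason
    return records, failed

# usage with a fake fetcher (no network): page 2 "fails"
def fake_fetch(u):
    if u.endswith('/2'):
        raise ConnectionError('timed out')
    return [{'url': u}]

records, failed = collect(['p/1', 'p/2', 'p/3'], fake_fetch)
print(len(records), failed)  # 2 [('p/2', "ConnectionError('timed out')")]
```

With the script above this would be called as `collect(url, lambda u: get_data(u, head, c_dic))`, and the URLs in `failed` could be passed to `collect` again for a second attempt.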

Getting the URLs of the pages that hold the data:

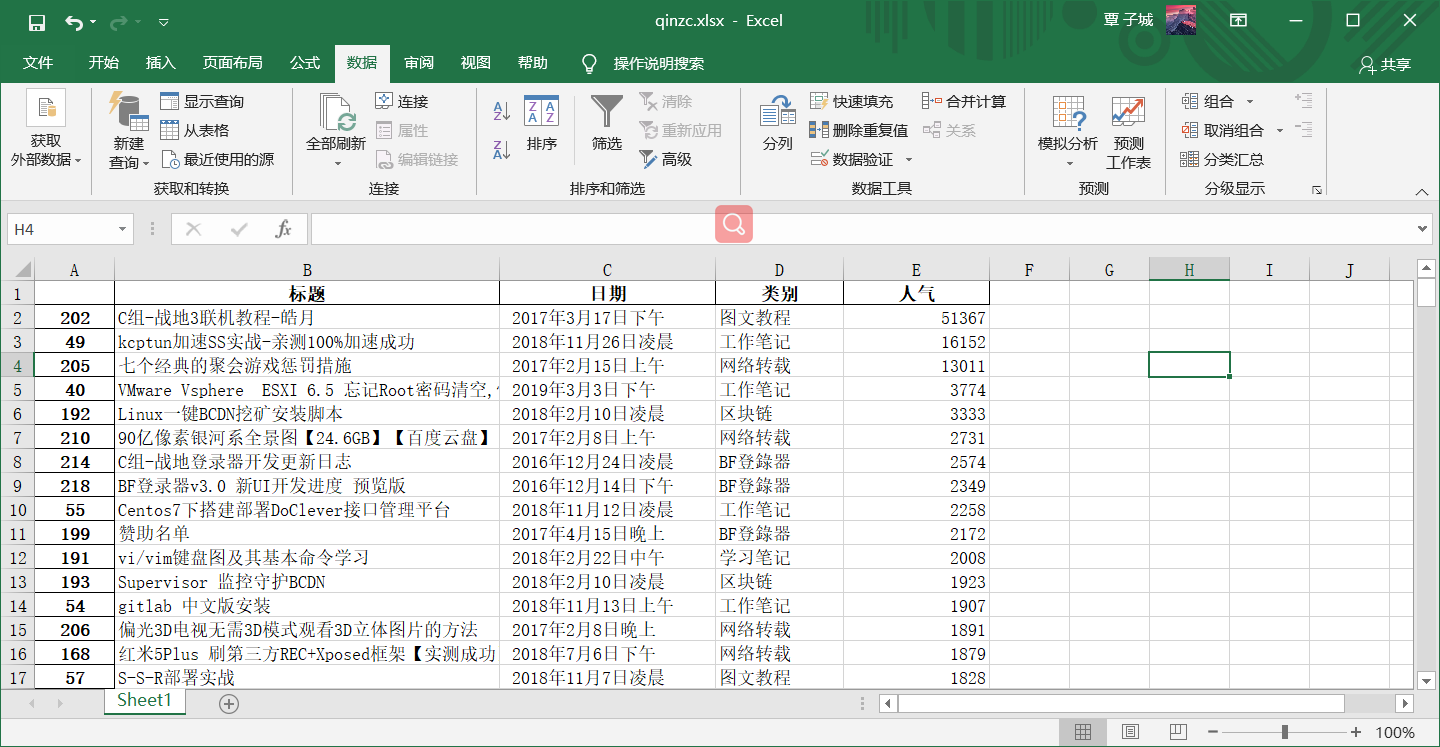

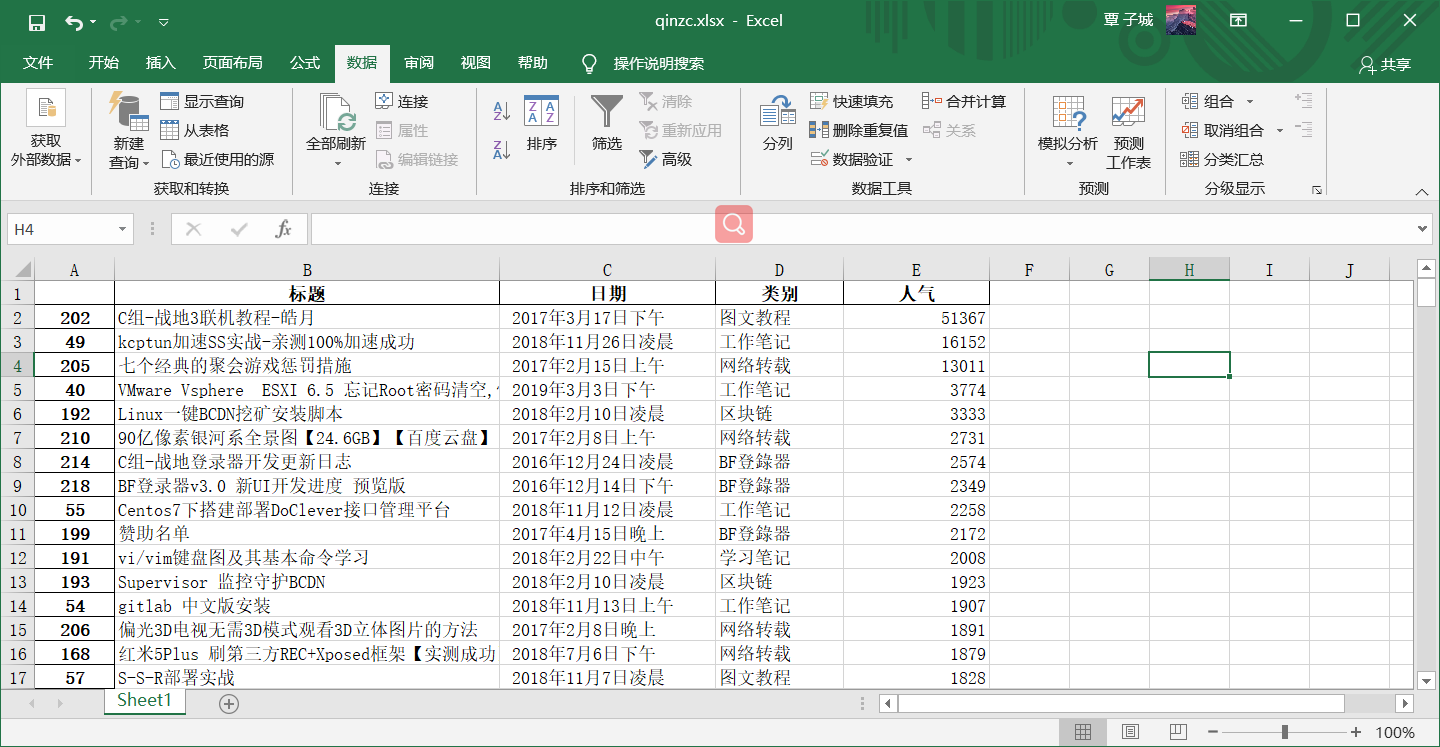

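```python
    # Data cleaning: load the records into a DataFrame
    datadf = pd.DataFrame(data_lst)
    # Export to Excel (an .xlsx writer such as openpyxl must be installed)
    datadf.to_excel('D:/python/爬虫/qinzc.xlsx')
```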
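The goal stated at the top is a visit ranking, but the script exports the records in page order. A minimal sketch of ranking them by the popularity field (`人气`) before export, assuming that field contains the view count as digits, possibly surrounded by other text; `rank_by_views` is a hypothetical helper and the sample records are made up:

```python
import re

def rank_by_views(records, key='人气'):
    # Assumption: the popularity field holds the view count as digits;
    # records without any number sort last.
    def views(rec):
        m = re.search(r'\d+', rec.get(key, ''))
        return int(m.group()) if m else -1
    return sorted(records, key=views, reverse=True)

sample = [  # made-up records in the shape produced by get_data
    {'标题': 'A', '人气': '12'},
    {'标题': 'B', '人气': '(305)'},
    {'标题': 'C', '人气': ''},
]
print([r['标题'] for r in rank_by_views(sample)])  # ['B', 'A', 'C']
```

Passing the ranked list to `pd.DataFrame` instead of `data_lst` would write the sheet in ranking order.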

桂ICP备16010384号-1

